第2关-k8s集群搭建kubeadm

简单理解:

docker默认是单机使用的,不可以跨主机,也没有高可用,所以生产环境一般不会单独使用docker跑应用,k8s主要是作为docker的一个调度器来使用,可以使容器实现跨节点通信,当一台运行容器的节点故障后,容器会自动迁移到其它可用节点上继续运行服务,目前比较常用的是k8s

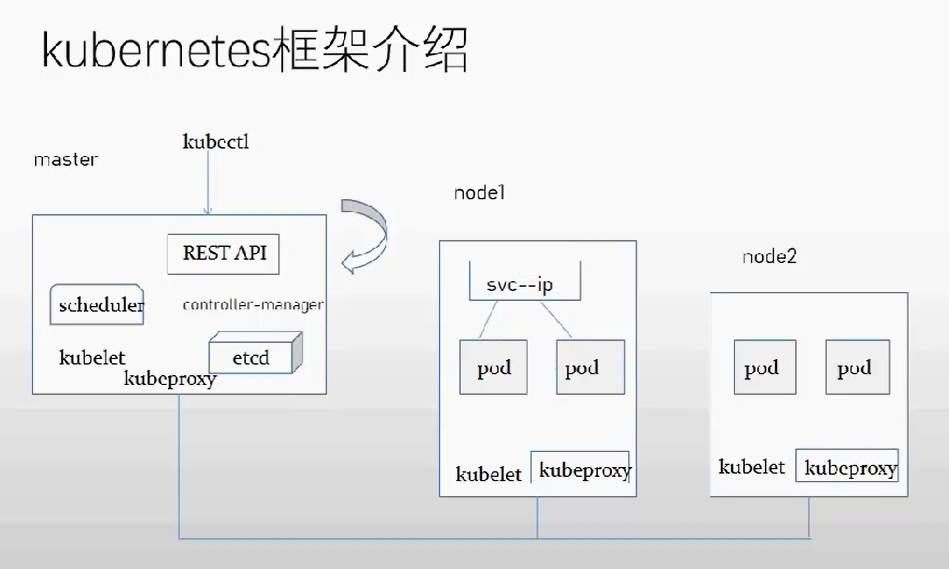

k8s架构:k8s主要由master节点和node节点构成,而且常用操作都在master上操作,作为控制节点角色,node节点提供计算功能

master:

kubectl:k8s的所有操作都是通过kubectl指令操作的

REST API:k8s对外部服务提供的接口服务,例如图形化界面或者kubectl都会通过REST API接口下发指令来控制k8s

scheduler:调度器,例如创建pod,scheduler可以控制将pod分配到哪个pod节点

controller-manager:检测pod或者node的健康状态,并维持pod的正常运行,如果pod故障,controller-manger会自动修复,例如在启动一个pod副本

kubelet:代理软件,例如在master上对node节点下发的指令,都需要通过kubelet组建来告知各个组件

etcd:数据库,所有配置数据都存放在etcd数据库中

kubeproxy:在所有节点都需要运行kubeproxy,后期通过创建svc来将pod映射到外网,当外部通过svc-ip访问pod的时候就需要通过kubeporxy进行路由转发到pod

node:

pod:k8s环境运行的最小单位,一个pod中可以包含一个或多个容器

安装方式:

kubeadmin(生产)

yum

源码安装(学习,深刻理解)

开始安装:

初始化各个节点的配置:

安装常用工具

yum -y install wget telnet net-tools lrzsz vim zip unzip

修改主机名

将master节点主机名修改为k8s-master01 node节点为k8s-node01 以这种命名规则命名即可

关闭防火墙

[root@k8s-node01 ~]# systemctl stop firewalld && systemctl disable firewalld

永久关闭selinux

[root@k8s-node01 ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config;cat /etc/selinux/config

临时关闭selinux

[root@k8s-node01 ~]# setenforce 0

配置hosts

[root@k8s-node01 ~]# cat >> /etc/hosts <<EOF

10.1.1.100 k8s-master01

10.1.1.110 k8s-node01

10.1.1.120 k8s-node02

EOF临时关闭swap

[root@k8s-node01 ~]# swapoff -a

永久关闭swap

[root@k8s-node01 ~]# vim /etc/fstab

配置yum源(自带的kubernetes版本太低)

rm -rf /etc/yum.repos.d/*

cd /etc/yum.repo/

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repovim k8s.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpgyum clean all && yum makecache

安装docker

[root@k8s-master01 ~]#yum install -y yum-utils device-mapper-persistent-data lvm2

[root@k8s-master01 ~]# yum -y install docker由于内核不支持 overlay2所以需要升级内核或者禁用overlay2(我们选择禁用,安装完docker可以启动docker测试下是否支持,启动docker不报错的可以忽略这一步)

vim /etc/sysconfig/docker 将 --selinux-enabled=false

启动docker服务

[root@k8s-master01 ~]# systemctl start docker && systemctl enable docker

设置服务器时区

timedatectl set-timezone Asia/Shanghai

设置相关k8s参数

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOFsysctl -p

echo "1" > /proc/sys/net/ipv4/ip_forward

echo "1" > /proc/sys/net/bridge/bridge-nf-call-iptables安装k8s相关安装包 这里安装的是1.23.4版本,可以根据自己的需求指定版本,前提是你yum源支持 使用 yum list kube\* 来查看当前yum源支持的最新版本

[root@k8s-master01 ~]#yum -y install kubeadm-1.23.4 kubelet-1.23.4 kubectl-1.23.4

启动服务

不需要启动kubelet 只要enable[root@k8s-master01 ~]# systemctl enable kubelet

====================================以上操作在所有节点执行,如果新加node节点也需要执行上述操作================================================================

开始初始化k8s集群:在master上执行

$ kubeadm init \

--apiserver-advertise-address=10.1.1.100 \ #master组件监听的api地址,这里写masterIP地址即可或者多网卡选择另一个IP地址

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.23.4 \

--service-cidr=10.2.0.0/16 \

--pod-network-cidr=10.244.0.0/16初始化完成后kubelet服务如果没异常的话应该正常启动了

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config查看nodes状态kubectl get nodes

安装flannel网络

几个坑

https://raw.githubusercontent.com/coreos/flannel/a70459be0084506e4ec919aa1c114638878db11b/Documentation/kube-flannel.yml 这个yml文件有几处要改的地方,

1.rbac.authorization.k8s.io/v1beta 改为rbac.authorization.k8s.io/v1

2.extensions/v1beta1 改达 apps/v1

- 所有apps/v1 要加入选择器 selector 如下

spec:

selector:

matchLabels:

app: flannelkube-flannel.yml 代码如下

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: flannel

rules:

- apiGroups:

- ""

resources:

- pods

verbs:

- get

- apiGroups:

- ""

resources:

- nodes

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes/status

verbs:

- patch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: flannel

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: flannel

subjects:

- kind: ServiceAccount

name: flannel

namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: flannel

namespace: kube-system

---

kind: ConfigMap

apiVersion: v1

metadata:

name: kube-flannel-cfg

namespace: kube-system

labels:

tier: node

app: flannel

data:

cni-conf.json: |

{

"name": "cbr0",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-amd64

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

hostNetwork: true

nodeSelector:

beta.kubernetes.io/arch: amd64

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-amd64

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-amd64

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: true

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-arm64

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

hostNetwork: true

nodeSelector:

beta.kubernetes.io/arch: arm64

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-arm64

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-arm64

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: true

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-arm

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

hostNetwork: true

nodeSelector:

beta.kubernetes.io/arch: arm

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-arm

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-arm

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: true

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-ppc64le

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

hostNetwork: true

nodeSelector:

beta.kubernetes.io/arch: ppc64le

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-ppc64le

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-ppc64le

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: true

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-s390x

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

hostNetwork: true

nodeSelector:

beta.kubernetes.io/arch: s390x

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-s390x

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-s390x

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: true

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

kubectl apply -f kube-flannel.yml

遇到问题,执行kubectl get pods -n kube-system查看到flannel容器拉取镜像错误,在master节点上手动拉取镜像后再次执行kubectl apply,查看到flannel网络运行正常

docker pull quay.io/coreos/flannel:v0.11.0-amd64

kubectl apply -f kube-flannel.yml=====================================================master节点部署完成==================================================================================

将node节点加入到master集群中,在需要加入k8s集群的node节点上执行该命令

kubeadm join 10.1.1.100:6443 --token jib7mk.mybh3vpbfcf2tgw0 \

--discovery-token-ca-cert-hash sha256:e5ab611a21d0442c1f25b9f88eaf5cddc66ad906d206163f2630d6228efa733如果忘记kubeadm join后的参数,可用通过下面命令来获取

[root@k8s-master01 opt]# kubeadm token create --print-join-command

问题1:

执行完kubeadm join后在master节点执行kubectl get nodes 发现k8s-node01节点状态为NotReady

解决方法:在node节点查看日志,tail -f /var/log/message或者journalctl -f -u kubelet发现有报错日志如下

Container runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized通过日志可以看出是网络组件出现的问题,然后在node节点上执行docker images,查看到flannel组件的镜像没有拉取到,可用稍微等一下,如果不想等或者等了一会还拉取不到,可用手动拉取docker pull quay.io/coreos/flannel:v0.11.0-amd64

过一俩分钟在master节点上进行验证

在master节点上部署k8s-dashboard组件,该组件为k8s的web ui组件

vim kubernetes-dashboard.yml

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# ------------------- Dashboard Secret ------------------- #

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kube-system

type: Opaque

---

# ------------------- Dashboard Service Account ------------------- #

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system

---

# ------------------- Dashboard Role & Role Binding ------------------- #

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: kubernetes-dashboard-minimal

namespace: kube-system

rules:

# Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.

- apiGroups: [""]

resources: ["secrets"]

verbs: ["create"]

# Allow Dashboard to create 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

verbs: ["create"]

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics from heapster.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:"]

verbs: ["get"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: kubernetes-dashboard-minimal

namespace: kube-system

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard-minimal

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kube-system

---

# ------------------- Dashboard Deployment ------------------- #

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

spec:

containers:

- name: kubernetes-dashboard

image: registry.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1

ports:

- containerPort: 8443

protocol: TCP

args:

- --auto-generate-certificates

# Uncomment the following line to manually specify Kubernetes API server Host

# If not specified, Dashboard will attempt to auto discover the API server and connect

# to it. Uncomment only if the default does not work.

# - --apiserver-host=http://my-address:port

volumeMounts:

- name: kubernetes-dashboard-certs

mountPath: /certs

# Create on-disk volume to store exec logs

- mountPath: /tmp

name: tmp-volume

livenessProbe:

httpGet:

scheme: HTTPS

path: /

port: 8443

initialDelaySeconds: 30

timeoutSeconds: 30

volumes:

- name: kubernetes-dashboard-certs

secret:

secretName: kubernetes-dashboard-certs

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

---

# ------------------- Dashboard Service ------------------- #

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system

spec:

type: NodePort

ports:

- port: 443

targetPort: 8443

selector:

k8s-app: kubernetes-dashboard部署该服务

[root@k8s-master01 opt]# kubectl apply -f kubernetes-dashboard.yaml

查看访问dashboard服务的端口号,访问IP使用master节点的IP地址

[root@k8s-master01 opt]# kubectl get svc kubernetes-dashboard -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.103.18.237 <none> 443:31072/TCP 2m33s访问验证:https://10.1.1.100:31072/

如何使用令牌登陆:

[root@k8s-master01 opt]# kubectl create serviceaccount dashboard-admin -n kube-system

[root@k8s-master01 opt]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

[root@k8s-master01 opt]# kubectl get secret -n kube-system|grep dashboard

dashboard-admin-token-pbg4s kubernetes.io/service-account-token 3 96s

kubernetes-dashboard-certs Opaque 0 48m

kubernetes-dashboard-key-holder Opaque 2 47m

kubernetes-dashboard-token-ksd9f kubernetes.io/service-account-token 3 48m查看token信息

[root@k8s-master01 opt]# kubectl describe secret dashboard-admin-token-pbg4s -n kube-system

登陆成功,部署完成